I’m sure you’ve seen the short video clips made by OpenAI’s latest endeavor, Sora. In their words:

Sora is an AI model that can create realistic and imaginative scenes from text instructions.

I’m sure plenty of Hollywood execs salivated upon reading that. And yes, the clips OpenAI has shared sure look impressive.

At first glance.

has a series of great short posts in which it becomes clear that Sora’s clips ignore physics, biology, and even fundamentals such as object permanence. Much like how ChatGPT sounds impressive, Sora looks impressive, but after a bit of digging, we find out that it’s not too different from a horse doing tricks.

But I’m not here to talk about the flaws of Sora. No, today, I want to write about the (predictable) fan response that washed over X. As you can imagine, Sora was/is heralded as the ultimate tool to create personalized media. The movie you always wanted to see is only a prompt away.

How. Dull.

How narcissistic.

How emotionally and intellectually lazy.

I don’t know about you, but I read books/watch movies to be challenged. I want to be exposed to new ideas, be shown new perspectives, and be forced to confront my assumptions. Where is the challenge in a movie (or book) that caters to your every desire and never forces you to flex your mental muscles? A recent (and admittedly hypothetical) study suggests that those metaphorical mental muscles - in this case, higher executive functions - might indeed atrophy as we begin to rely more and more on generative software tools (I still struggle to see them as actual AI).

Sure, we’ve been cognitively offloading for ages. Ever since humanity developed writing, the demands on our short-term memory have changed, as have the demands on the neural underpinnings of dexterity. The brain, in other words, responds (to an extent) to the demands, or lack thereof, placed on it. As a famous example, London cab drivers develop chonky hippocampi due to the navigational demands of their jobs: even in the age of GPS, they have to pass a stringent navigation test known as ‘The Knowledge’, and this leaves traces in their brains.

But here is why the mindless cheering for friction-free personalized media worries me more than most other forms of cognitive offloading: exposure to fictional narratives, especially those that force you to see the story world through the eyes of a character unlike you, increases empathy and decreases prejudice [1]. Fiction literally changes your brain and it is an incredibly powerful tool to expand your inner horizons and become, well, more human(e). It is meant to be challenging, it is meant to surprise you, it is meant to make you rethink your worldview.

How likely is it that all those AI cheerleaders will explicitly prompt their (already very biased) tools to show them perspectives that might make them uncomfortable by confronting their biases? ‘Not very’ would be my guess.

Do we need a world with less empathy and more prejudice? Or could we all use some practice in seeing the world through each other’s eyes? Part of what makes fiction so powerful is that it allows us to build bridges, step into a shared world, and imagine better (as a noun and an adjective).

Loneliness is already a global burden and a lethal poison. Do we truly need AI tools to sever those tenuous connections we might build through fiction as well? It’s a shared world, in more ways than one.

I’m generally a tech optimist [2] and I believe machine learning holds tremendous promise (even the generative software tools), but when I see how it’s being used by AI acolytes right now, I worry. There is something fundamentally human lacking in their push for tools that take jobs, fool people, and are mostly used for clickbait and scams. Not to mention the tremendous environmental cost. It doesn’t have to be this way.

Maybe the generative AI pushers need to read more fiction.

Stuff I’ve read: (Something new I wanted to try, let me know if it’s worthwhile.)

Against power: An essay on French thinker, philosopher, and revolutionary Sophie de Grouchy — an(other) incredible woman silenced in history.

Tech Strikes Back: A deep dive into the tech subculture of effective accelerationism.

Online images amplify gender bias: A study finds that “gender bias is consistently more prevalent in images than text ...” Something to keep in mind as social media shifts more and more to the visual side.

[1] I’ve written elsewhere about why you should probably read more fiction.

[2] One of the very few areas in which I allow myself optimism (I know. I’m working on it). Being a tech optimist, however, does not mean barreling ahead blindly; that’s tech bro territory. For all their potential, new technologies are always embedded in a sociocultural context and ignoring their possible ripple effects is usually a recipe for unpleasantness.

Great perspective and well put: intellectual laziness. We've been chasing that for many decades in the name of 'convenience'. And cash, of course.

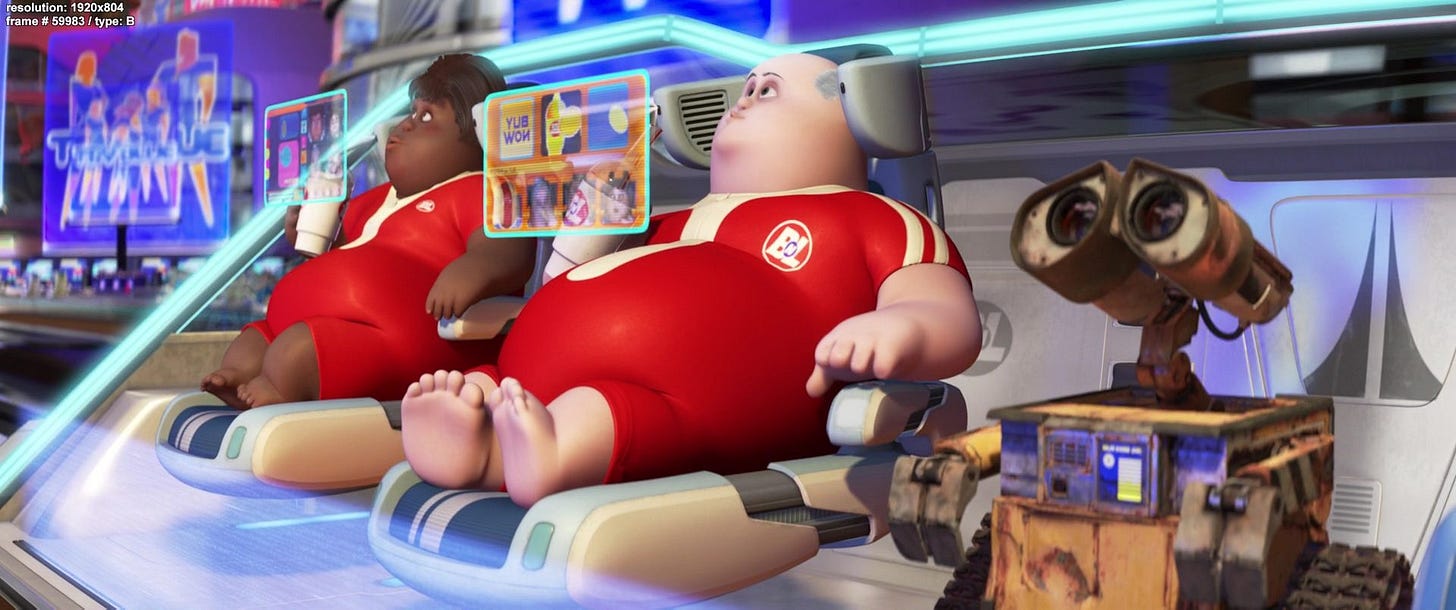

Definitely feeling like we’re on our way to WALL-E....

And I liked that new section on stuff you’re reading. I’ve bookmarked a bunch. 👍🏼